Retrieval Augmented Generation Explained

Overview

Retrieval augmented generation (RAG) is an architecture for generative AI that combines large language models (LLMs) with external knowledge retrieval to produce more accurate, grounded, and contextually relevant responses. As organizations push to embed generative AI into business workflows, RAG offers a way to address major risks, including hallucination, stale knowledge, and a lack of domain-specific knowledge, without constantly retraining large models. In this article, we first define retrieval augmented generation in both conceptual and technical terms. We then explore how RAG works: its architecture, components, and data flows, including deployment considerations such as vector stores, indexing, prompt augmentation, hybrid retrieval, and result ranking. Next, we cover real-world use cases, followed by common challenges and mitigation strategies for issues such as latency, source trust, relevance ranking, and consistency, along with design best practices. Later, we discuss advanced designs such as agentic RAG, hybrid retrieval across heterogeneous stores (vector indexes, knowledge graphs, full-text search), and domain adaptation. Finally, we look ahead to evolving trends and how RAG fits into the future of AI systems.

What Is Retrieval Augmented Generation?

Retrieval augmented generation (often called RAG) is a generative AI framework that improves the output of large language models by retrieving and incorporating external, domain-specific knowledge at query time. In contrast to a pure LLM approach, where the model’s responses derive entirely from patterns encoded during pretraining, RAG dynamically augments the model’s input with relevant passages, documents, or records drawn from external knowledge sources. As a result, the model’s generation can be grounded in up-to-date, authoritative content rather than relying solely on what it learned during training.

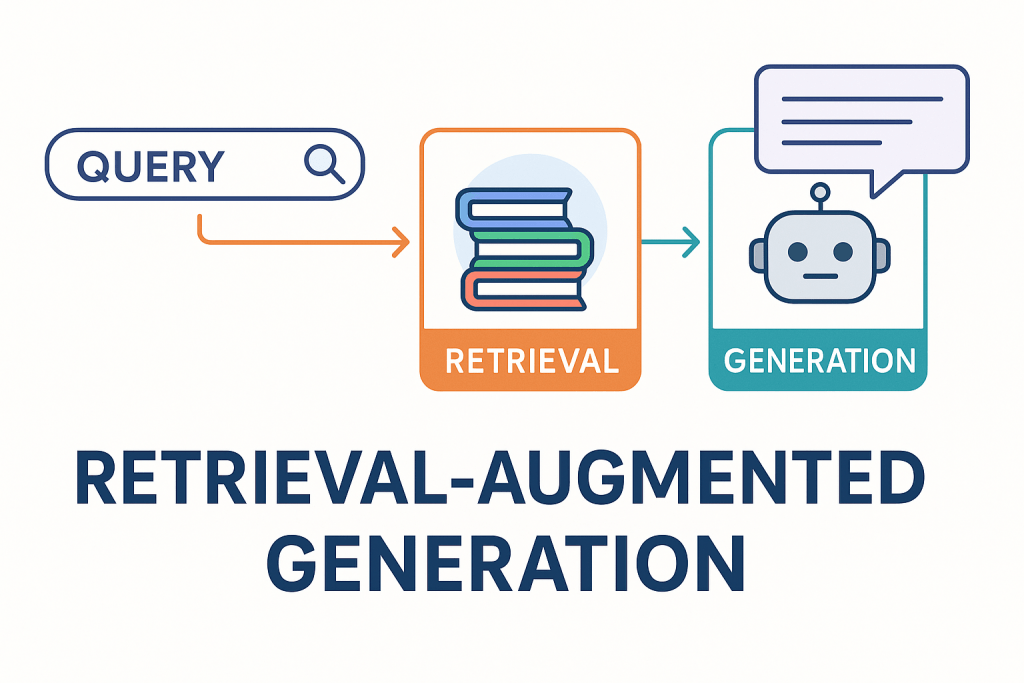

At its core, RAG bridges the strengths of information retrieval with generative modeling. The retrieval component identifies candidate documents or data relevant to a user’s query; the augmentation step combines that retrieved content with the original prompt; the generation step lets the LLM produce a response informed by both the prompt and the retrieved knowledge. By doing this, RAG systems allow generative models to draw on a broader, evolving knowledge base rather than staying limited to fixed pretrained weights.

RAG emerged to address two fundamental limitations of traditional large language models. First, LLMs have knowledge cutoffs: they are trained on fixed datasets up to a certain date and cannot inherently incorporate new facts beyond that period. Second, LLMs sometimes hallucinate or generate plausible but incorrect statements because they rely on statistical patterns rather than source verification. Retrieval augmented generation helps mitigate both by providing fresh, external context and letting the model reason with grounded evidence.

Because retrieval augmented generation enables domain customization, enterprises can build AI applications that draw on internal knowledge bases, document stores, regulatory texts, or other specialized sources. Instead of retraining a massive model for each domain, developers can rely on retrieval and augmentation to tailor responses. As a result, RAG is now often the preferred architecture when generative AI needs to be reliable, auditable, and domain-aware.

Architectural Components and Workflow of RAG

To understand how retrieval augmented generation is implemented in practice, it is helpful to break down its architecture into distinct stages: ingestion, indexing, retrieval, augmentation (prompt engineering), generation, and optionally reranking and feedback loops.

Ingestion & Preprocessing

Before using RAG in production, you need a corpus of knowledge to serve as the retrieval base. This corpus can consist of internal documents, knowledge graph content, web pages, databases, or regulatory texts. The ingestion stage involves collecting, cleaning, splitting (chunking), and embedding these documents into a searchable format.

Chunking divides longer documents into manageable segments, often overlapping to preserve context. Each chunk is embedded via a model (such as a transformer encoder) into a high-dimensional vector representation. These vectors are stored in a vector database or vector index. Metadata (document identifiers, source, timestamps) is associated with each chunk so that when results are retrieved, you can trace back to original documents.
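As a rough illustration of the chunking step, here is a minimal sketch of fixed-size chunking with overlap. It assumes whitespace-delimited words as the unit (production pipelines often count tokens from the embedding model’s tokenizer instead), and `Chunk` and `chunk_document` are hypothetical names. The `metadata` dict is where a document identifier, source, or timestamp would travel with each chunk so results can be traced back.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Chunk:
    doc_id: str
    index: int  # position of the chunk within its source document
    text: str
    metadata: dict = field(default_factory=dict)


def chunk_document(doc_id: str, text: str, chunk_size: int = 200,
                   overlap: int = 50, metadata: dict | None = None) -> list[Chunk]:
    """Split text into windows of `chunk_size` words, each sharing
    `overlap` words with the previous window."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    if not words:
        return []
    step = chunk_size - overlap
    chunks = []
    # Stop once the remaining words are already covered by the previous overlap.
    for i, start in enumerate(range(0, max(len(words) - overlap, 1), step)):
        window = words[start:start + chunk_size]
        chunks.append(Chunk(doc_id, i, " ".join(window), dict(metadata or {})))
    return chunks
```

For example, a 10-word document with `chunk_size=4` and `overlap=1` yields three chunks, where each chunk repeats the last word of the one before it so that a sentence spanning a boundary is not lost entirely.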

Indexing & Embedding

Embedding and indexing are critical. Embedding models convert text chunks into semantic vectors, enabling similarity search. The indexing infrastructure (vector store, approximate nearest neighbors engine, hybrid index) supports efficient similarity matching. Some systems also maintain traditional keyword indexes (e.g. BM25) in parallel for hybrid search.
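To make the keyword side concrete, here is a compact, in-memory sketch of the Okapi BM25 ranking function. It uses k1 = 1.5 and b = 0.75 (typical values) and the smoothed IDF used by Lucene, which keeps scores non-negative. Lowercase whitespace tokenization and a full scan over all documents are simplifying assumptions; a real keyword index would add stemming, stop-word handling, and an inverted index.

```python
from __future__ import annotations

import math
from collections import Counter


class BM25:
    """Minimal in-memory Okapi BM25 scorer (illustrative, not optimized)."""

    def __init__(self, docs: list[str], k1: float = 1.5, b: float = 0.75):
        self.k1, self.b = k1, b
        self.doc_tokens = [self._tokenize(d) for d in docs]
        self.doc_lens = [len(t) for t in self.doc_tokens]
        self.avgdl = sum(self.doc_lens) / len(docs) if docs else 0.0
        self.term_freqs = [Counter(t) for t in self.doc_tokens]
        # Document frequency: in how many documents each term appears.
        df = Counter(term for tf in self.term_freqs for term in tf)
        n = len(docs)
        # Smoothed IDF: log(1 + (N - df + 0.5) / (df + 0.5)).
        self.idf = {t: math.log(1 + (n - f + 0.5) / (f + 0.5)) for t, f in df.items()}

    @staticmethod
    def _tokenize(text: str) -> list[str]:
        return text.lower().split()

    def score(self, query: str, i: int) -> float:
        tf, dl = self.term_freqs[i], self.doc_lens[i]
        total = 0.0
        for term in self._tokenize(query):
            if term not in tf:
                continue
            f = tf[term]
            # Length normalization: longer-than-average documents are penalized.
            norm = self.k1 * (1 - self.b + self.b * dl / self.avgdl)
            total += self.idf[term] * f * (self.k1 + 1) / (f + norm)
        return total

    def search(self, query: str, k: int = 3) -> list[tuple[int, float]]:
        scores = [(i, self.score(query, i)) for i in range(len(self.doc_tokens))]
        return sorted(scores, key=lambda s: s[1], reverse=True)[:k]
```

Because BM25 rewards exact term matches, a query containing an identifier such as an error code will surface the document that contains that exact string, which is the behavior hybrid search relies on.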

The quality of embeddings and the design of the index heavily influence retrieval relevance, latency, and scalability. In enterprise scenarios, teams also need near-real-time updates, clear refresh policies, and consistent embeddings across batches (for example, encoding every indexed chunk with the same embedding model version that encodes queries).

Retrieval (Candidate Selection)

When a user query arrives, it is encoded with the same embedding model and used to query the vector index (and possibly keyword indexes) to find the top-k most semantically relevant chunks. Some variants first expand or rewrite the query to improve retrieval. Other systems rerank the retrieved candidates using smaller models (such as cross-encoder rerankers) or heuristics to increase precision.
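A minimal sketch of dense top-k retrieval follows. The `embed` function here is a hypothetical stand-in for a real embedding model: it hashes words into a fixed-size, L2-normalized vector, so it captures word overlap rather than true semantics. `DenseIndex` performs exact brute-force search; production systems typically swap this for an approximate nearest neighbor (ANN) index. The important detail mirrored from the text is that the query passes through the same `embed` function as the indexed chunks.

```python
from __future__ import annotations

import heapq
import math
import zlib

DIM = 256


def embed(text: str) -> list[float]:
    """Toy stand-in for an embedding model: hashed bag of words,
    L2-normalized. It captures word overlap, not real semantics."""
    vec = [0.0] * DIM
    for token in text.lower().split():
        vec[zlib.crc32(token.encode()) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def cosine(a: list[float], b: list[float]) -> float:
    # Both vectors are unit-length, so the dot product equals cosine similarity.
    return sum(x * y for x, y in zip(a, b))


class DenseIndex:
    """Brute-force (exact) nearest-neighbor index over chunk embeddings."""

    def __init__(self) -> None:
        self.ids: list[str] = []
        self.vectors: list[list[float]] = []

    def add(self, chunk_id: str, text: str) -> None:
        self.ids.append(chunk_id)
        self.vectors.append(embed(text))

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        q = embed(query)  # same embedding scheme as the indexed chunks
        scored = ((cid, cosine(q, v)) for cid, v in zip(self.ids, self.vectors))
        return heapq.nlargest(k, scored, key=lambda s: s[1])
```

Swapping `embed` for a real model changes nothing else in the flow; what matters is that indexing and querying share the same model.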

Hybrid retrieval combines dense (vector) and sparse (keyword) retrieval methods. This is especially beneficial in domains where exact term matching matters, such as product codes, error identifiers, or legal citations, which embeddings alone might miss. Combining both retrieval modes helps balance recall and precision.
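One common way to merge the dense and sparse result lists is reciprocal rank fusion (RRF). This is a swapped-in example technique, since the article does not prescribe a fusion method. RRF uses only each document’s rank in each list, so it sidesteps the problem that cosine similarities and BM25 scores live on different scales. The constant k = 60 is a frequently used default; the function name is illustrative.

```python
from __future__ import annotations

from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse several ranked lists of document IDs (best first) with RRF:
    score(d) = sum over lists of 1 / (k + rank(d)), where rank starts at 1."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

For instance, fusing a dense ranking `["d1", "d2", "d3"]` with a keyword ranking `["d3", "d1", "d4"]` puts `d1` first and `d3` second, because both appear near the top of both lists, while documents found by only one retriever still make the fused list.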

Augmentation (Prompt Construction)

Once relevant chunks are retrieved, the system “augments” the original user query by injecting the retrieved content as context. The augmented prompt may have a template such as:

QUESTION:

<user query>

CONTEXT:

<retrieved document excerpts or summaries>

INSTRUCTIONS:

Using the context above, answer the question. If the context does not contain the answer, say so.
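The template above can be filled programmatically. The following is a minimal sketch that assumes retrieved passages arrive as `(source_id, text)` pairs ordered best first. Labeling each passage with its source ID (so the model can cite it) and enforcing a character budget are illustrative choices; a real system would usually budget in tokens using the model’s tokenizer. `build_prompt` is a hypothetical name.

```python
from __future__ import annotations

TEMPLATE = """QUESTION:
{question}

CONTEXT:
{context}

INSTRUCTIONS:
Using the context above, answer the question. If the context does not contain the answer, say so."""


def build_prompt(question: str, passages: list[tuple[str, str]], max_chars: int = 4000) -> str:
    """Fill the template with retrieved (source_id, text) passages, best first.
    Each passage is tagged with its source; passages that would exceed the
    character budget are dropped."""
    parts, used = [], 0
    for source_id, text in passages:
        block = f"[{source_id}] {text.strip()}"
        if used + len(block) > max_chars:
            break
        parts.append(block)
        used += len(block)
    context = "\n\n".join(parts) if parts else "(no relevant context found)"
    return TEMPLATE.format(question=question.strip(), context=context)
```

Because passages are added best first, a tight budget trims the least relevant evidence rather than the most relevant.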

Prompt engineering is essential here. A well-designed template helps the model give weight to the retrieved context, handle conflicting evidence, and respect constraints (e.g. not asserting anything beyond the context). Some systems supply context at multiple levels of granularity or interleave retrieval and generation.

Generation

The augmented prompt is fed into the LLM. The model generates a response informed by both the query and retrieved context. Because the LLM sees explicit evidence, its answers tend to be more grounded and less likely to hallucinate.

In some architectures, generation happens in multiple passes. For example, the model may generate a draft answer, then conditionally retrieve further evidence, refine its response, or verify citations.
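The multi-pass pattern can be sketched as a small control loop. This is an illustration under assumptions: `retrieve` and `generate` are hypothetical callables standing in for a retriever and an LLM call, and the convention that the model signals a need for more evidence by returning a follow-up query is an assumed interface, not a standard API.

```python
from __future__ import annotations

from typing import Callable, Optional


def iterative_rag(
    question: str,
    retrieve: Callable[[str], list[str]],
    generate: Callable[[str, list[str]], tuple[str, Optional[str]]],
    max_rounds: int = 3,
) -> tuple[str, list[str]]:
    """Draft, retrieve more, refine. `generate` returns a draft answer and,
    if it wants more evidence, a follow-up query (otherwise None)."""
    evidence = list(retrieve(question))  # copy so the retriever's list is not mutated
    answer = ""
    for _ in range(max_rounds):
        answer, follow_up = generate(question, evidence)
        if follow_up is None:  # the model is satisfied with its evidence
            break
        new = [p for p in retrieve(follow_up) if p not in evidence]
        if not new:  # no new evidence; stop rather than loop uselessly
            break
        evidence.extend(new)
    return answer, evidence
```

The `max_rounds` cap and the "no new evidence" check bound the extra latency that each additional retrieval pass adds.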

Reranking, Validation, and Feedback

After initial generation, additional steps may improve quality. A reranking module may score candidate responses and pick the most contextually consistent answer. A verification subroutine can cross-check statements against retrieved sources to reject or flag uncertain assertions. In continuously running systems, feedback loops let the system learn which retrievals or prompt formulations produce better downstream results, refining future retrieval ranking or prompt templates.
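As a crude illustration of a verification subroutine, the sketch below flags answer sentences whose content words mostly do not appear in any retrieved source. This lexical-overlap check is a deliberately simple, swapped-in heuristic (real systems often use an entailment model or an LLM judge instead), and the 0.5 threshold and the "words longer than three characters" rule are arbitrary assumptions.

```python
from __future__ import annotations

import re


def _content_words(text: str) -> set[str]:
    # Lowercased alphanumeric tokens longer than 3 characters: a rough
    # proxy for content words that skips most function words.
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if len(w) > 3}


def flag_unsupported(answer: str, sources: list[str], threshold: float = 0.5) -> list[str]:
    """Return answer sentences whose best overlap with any single source
    (share of the sentence's content words found there) is below threshold."""
    source_words = [_content_words(s) for s in sources]
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = _content_words(sentence)
        if not words:
            continue
        best = max((len(words & sw) / len(words) for sw in source_words), default=0.0)
        if best < threshold:
            flagged.append(sentence)
    return flagged
```

Given a source stating that a warranty covers hardware defects for two years, an answer that adds "It also includes free accidental damage repair." would have that second sentence flagged, since none of its content words appear in the source.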

In advanced designs, agentic RAG lets the model decide which retrieval strategy to use and when to retrieve again during generation. Some recent research proposes fusing multiple retrieval modalities (vector, graph, full text) within one system, improving both recall and precision across knowledge types (for example, the HetaRAG architecture for hybrid retrieval).

Use Cases and Applications of Retrieval Augmented Generation

Retrieval augmented generation has broad applications wherever generative AI must respond with accuracy, context, and domain-specific knowledge. Below are key use cases.

Enterprise Knowledge Assistants & Chatbots

Organizations deploy chatbots powered by RAG to provide accurate answers from internal knowledge bases: policy documents, support tickets, internal wikis, and product documentation. Because RAG grounds answers in enterprise data, the responses are more trustworthy and auditable, and less likely to be generic or off-base.

Question Answering and Research Tools

RAG systems shine for knowledge-intensive tasks such as legal research, scientific literature summarization, compliance analysis, or market intelligence. Such tools retrieve the most relevant evidence and generate concise answers with citations, enabling users to trust and verify results.

Summarization and Contextual Report Generation

Rather than summarizing entire documents, RAG enables summarization tools to draw on the most relevant segments across a large corpus and then generate coherent reports. This helps keep summaries accurate and specific to the question rather than generic.

Decision Support & Conversational Interfaces

In decision support systems, RAG can feed domain evidence into conversations. For instance, a digital assistant could retrieve regulatory clauses, competitor reports, or financial analysis to support advice or scenario analysis within a dialogue.

Technical Troubleshooting & Diagnostics

For enterprise support, RAG can guide troubleshooting by retrieving relevant manuals, prior support tickets, known issue logs, or standard operating procedures, and then generate diagnostic suggestions tailored to the context.

Benefits, Trade-offs, and Challenges

While retrieval augmented generation offers major advantages over pure LLMs, it is not without trade-offs and engineering complexity.

Reduced Hallucinations and Freshness

Because the model has access to retrieved evidence, it is less prone to hallucinate, and responses can be kept current by refreshing the retrieval corpus rather than retraining the model. RAG directly addresses the knowledge cutoff problem of static LLMs.

Domain Customization Without Full Retraining

RAG allows domain specialization by customizing the retrieval corpus rather than retraining large models for each domain. This is generally more efficient and flexible, since the corpus can be updated or swapped without touching model weights.

Traceability and Source Attribution

Since responses draw on retrieved documents, RAG systems can offer citations or links back to source material. This transparency builds trust and enables verification.

Latency and Scalability

Retrieval and ranking introduce additional latency. High-throughput systems must optimize retrieval speed, index architecture, caching, and prompt strategies to ensure end-to-end performance remains acceptable.

Relevance and Noise

Retrieval may bring context that is tangential, contradictory, or not directly relevant. The model must filter or weight information appropriately. Poor retrieval quality can degrade generation rather than help it.

Retrieval-Generation Misalignment

The LLM may ignore retrieved context or incorrectly combine conflicting evidence. Ensuring the model respects the context is critical. Reranking, prompt constraints, and verification layers help mitigate this.

Source Reliability and Bias

The quality of the retrieval corpus directly affects output. If sources are outdated, biased, or erroneous, RAG will propagate those issues. The risk of combining contradictory sources, or of mixing fact with fiction, must also be managed.

Index Upkeep & Real-Time Updates

Keeping the retrieval corpus current and reindexing continuously or in near real time is complex. In dynamic domains, index staleness can undermine the benefits of RAG.

Multimodal and Heterogeneous Data Integration

Many corporations store data in various formats (text, tables, graphs, logs). Integrating different modalities into one retrieval system is challenging. Hybrid retrieval models that combine vector, keyword, relational, and graph methods are an active research area (e.g. HetaRAG).

Best Practices and Design Guidelines for RAG Systems

When designing or evaluating a retrieval augmented generation system, follow these guidelines to balance performance, reliability, and maintainability.

First, invest in high-quality embedding models and retrieval index design. Use domain-tuned embedding models if available, and experiment with hybrid indexing combining dense and sparse retrievals. Test embedding selection strategies, approximate nearest neighbor (ANN) configurations, and reranking layers.

Second, apply chunking strategies that preserve context yet maintain tractable sizes. Overlapping windows, semantic segmentation, and adaptive chunk lengths can help.

Third, design prompt templates that prioritize retrieved context, manage ambiguity, and instruct the model to defer when information is lacking. Use output schemas and explicit constraints to reduce hallucination.

Fourth, implement validation and feedback loops. Rerank candidate chunks before feeding to the LLM, verify generated output against source content, and loop corrections back into the retrieval module.

Fifth, monitor latency and throughput, and cache popular retrievals or prompt augmentations to speed up responses. Continuously evaluate end-to-end performance under realistic loads.

Sixth, manage index updates carefully. In highly dynamic domains, consider incremental indexing, near-real-time embeddings, and consistency checks to avoid staleness.
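Incremental indexing can be sketched by storing a content hash per chunk and re-embedding only chunks whose text has changed. `IncrementalIndex` and its `sync` method are hypothetical names, and `embed_fn` stands in for whatever embedding model the system uses. Chunks that disappear upstream are deleted so the index does not keep serving stale content.

```python
from __future__ import annotations

import hashlib


def content_hash(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


class IncrementalIndex:
    """Tracks a hash per chunk ID so only new or changed chunks are re-embedded."""

    def __init__(self, embed_fn) -> None:
        self.embed_fn = embed_fn  # hypothetical embedding function: str -> vector
        self.hashes: dict[str, str] = {}
        self.vectors: dict[str, list[float]] = {}

    def sync(self, chunks: dict[str, str]) -> dict[str, int]:
        """Bring the index in line with `chunks` (chunk_id -> current text)."""
        stats = {"added": 0, "updated": 0, "deleted": 0, "unchanged": 0}
        for chunk_id, text in chunks.items():
            h = content_hash(text)
            old = self.hashes.get(chunk_id)
            if old == h:
                stats["unchanged"] += 1
                continue  # skip the (expensive) embedding call
            stats["added" if old is None else "updated"] += 1
            self.vectors[chunk_id] = self.embed_fn(text)
            self.hashes[chunk_id] = h
        for chunk_id in set(self.hashes) - set(chunks):  # removed upstream
            del self.hashes[chunk_id], self.vectors[chunk_id]
            stats["deleted"] += 1
        return stats
```

The returned counts also make a convenient consistency check: a sync that unexpectedly reports many updates or deletions is worth investigating before the new index goes live.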

Seventh, maintain provenance and auditing. Record which documents were retrieved, which prompt templates used them, and how the model processed them. This transparency supports debugging, compliance, and trust.
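A provenance log can be as simple as one structured record per answered query. The sketch below is illustrative: `ProvenanceRecord` and its field names are assumptions, not a standard schema. It captures which chunks were retrieved (with their scores), which prompt template and model were used, and what was answered, serialized as a JSON line suitable for an append-only audit log.

```python
from __future__ import annotations

import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class ProvenanceRecord:
    """One audit-log entry per answered query (field names are illustrative)."""
    query: str
    retrieved_chunk_ids: list[str]
    retrieval_scores: list[float]
    prompt_template_id: str
    model_name: str
    answer: str
    request_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def to_json(self) -> str:
        # One JSON object per line makes the log easy to grep and to load later.
        return json.dumps(asdict(self), ensure_ascii=False)
```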

Eighth, if your data spans multiple formats (text, structured data, graphs), consider hybrid or multimodal retrieval designs. Fusing vector, graph, and relational retrieval can broaden coverage and improve precision.

Ninth, set fallback logic. In cases where retrieval confidence is low, have the system either return “I don’t know” or degrade gracefully. Avoid overconfident hallucinated answers in low-confidence scenarios.
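One simple form of fallback logic gates generation on retrieval confidence. In this sketch, `answer_or_abstain` and the `generate` callable are hypothetical, and the default `min_score` of 0.35 is an arbitrary placeholder: similarity scores are not comparable across embedding models, so any real threshold has to be calibrated on your own data.

```python
from __future__ import annotations

from typing import Callable

FALLBACK = "I don't know. I could not find this in the available sources."


def answer_or_abstain(
    question: str,
    hits: list[tuple[str, float]],
    generate: Callable[[str, list[str]], str],
    min_score: float = 0.35,
    min_hits: int = 1,
) -> str:
    """Call the LLM only when enough retrieved chunks clear a similarity
    threshold; otherwise return a fixed fallback message."""
    confident = [text for text, score in hits if score >= min_score]
    if len(confident) < min_hits:
        return FALLBACK
    # Only the chunks that cleared the threshold are passed on as context.
    return generate(question, confident)
```

Abstaining before the LLM call also saves the cost of a generation whose answer would likely be unreliable.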

Finally, iteratively evaluate using benchmarks and domain metrics. Use evaluation datasets, question answering benchmarks, human review, and domain relevance scoring to refine retrieval and generation performance over time.
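For the retrieval side of evaluation, two standard metrics are recall@k (what fraction of the relevant documents appear in the top k results) and mean reciprocal rank (MRR, based on how high the first relevant result appears). The sketch below assumes a labeled evaluation set in which each query comes with the set of document IDs judged relevant; the function names are illustrative.

```python
from __future__ import annotations


def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def reciprocal_rank(retrieved: list[str], relevant: set[str]) -> float:
    """1 / rank of the first relevant result, or 0 if none was retrieved."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def evaluate(runs: list[tuple[list[str], set[str]]], k: int = 5) -> dict[str, float]:
    """Average recall@k and MRR over (retrieved, relevant) pairs, one per query."""
    if not runs:
        raise ValueError("no queries to evaluate")
    n = len(runs)
    return {
        f"recall@{k}": sum(recall_at_k(r, rel, k) for r, rel in runs) / n,
        "mrr": sum(reciprocal_rank(r, rel) for r, rel in runs) / n,
    }
```

For two queries, one whose relevant document is ranked second and one whose relevant document is never retrieved, `evaluate(..., k=2)` gives a recall@2 of 0.5 and an MRR of 0.25. These retrieval metrics complement, rather than replace, human review of the generated answers.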

Advanced Topics and Emerging Trends

Retrieval augmented generation is evolving rapidly. Below are cutting-edge directions and research threads to watch.

Hybrid Retrieval Architectures

Rather than rely purely on vector databases, next-generation RAG systems fuse multiple retrieval paradigms. For example, the HetaRAG architecture orchestrates vector indices, full-text search, knowledge graphs, and structured databases across a unified retrieval plane, dynamically routing requests to different backends for higher combined recall and precision.

Agentic and Self-Guided Retrieval

Agentic RAG lets the model dynamically decide which retrieval actions to take during generation. Rather than retrieval only before generation, the model can invoke retrieval stages mid-generation, ask clarifying queries, or switch retrieval strategies adaptively. This can lead to more coherent, context-aware outputs.

Multi-Hop and Reasoning Retrieval

In complex queries requiring layered reasoning (e.g. “What is the timeline of related policy changes across regions?”), retrieval augmented generation can chain multiple retrievals, perform reasoning over intermediate steps, and use the graph of retrieved evidence to inform multi-step outputs.

Retrieval for Multimodal Data

Extending RAG to multimodal contexts (text, images, audio, structured tables) is gaining traction. Systems can embed nontextual pieces into a common semantic representation, enabling retrieval across modalities and generation that references multiple data types.

Domain Adaptation and Continual Learning

RAG systems are being combined with continual learning to adjust embedding spaces or retrieval strategies over time, without retraining the entire large model. This adaptation helps maintain relevance in dynamic domains.

Interpretability and Explainability

Future RAG systems are likely to place further emphasis on interpretability: tracking which retrieved chunks influenced each part of the answer, generating footnotes or explanations, and giving users control over source weighting or filtering.

Efficiency and Compression

To scale RAG, research is exploring compact embedding models, late interaction architectures, quantization of vector indices, and memory-efficient prompt formats. The goal is to reduce cost, latency, and storage footprint while retaining quality.
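To illustrate what quantizing a vector index means, here is a minimal sketch of symmetric scalar (int8) quantization with one scale factor per vector. It stores each component in one signed byte instead of a 4-byte float32, a 4x memory reduction, at the cost of a small reconstruction error (at most half the scale per component). The function names are hypothetical, and production vector stores implement this and more aggressive schemes (such as product quantization) natively.

```python
from __future__ import annotations

from array import array


def quantize_int8(vec: list[float]) -> tuple[array, float]:
    """Symmetric scalar quantization: map floats to integers in [-127, 127]
    using one scale factor per vector, stored as signed 8-bit values."""
    max_abs = max((abs(v) for v in vec), default=0.0)
    scale = max_abs / 127 if max_abs else 1.0
    codes = array("b", (round(v / scale) for v in vec))  # 1 byte per component
    return codes, scale


def dequantize(codes: array, scale: float) -> list[float]:
    # Approximate reconstruction; each component is off by at most scale / 2.
    return [c * scale for c in codes]
```

Similarity search can then run on the compact codes (or on dequantized values), trading a little accuracy for lower storage and memory bandwidth.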
