AI-Ready Data Foundation

The Problem with Most Agentic AI

The internet is flooded with open-source tools and APIs that promise to let anyone spin up an AI agent in minutes. And they do — but only for low-stakes tasks. These agents can draft emails, summarize articles, or organize your personal calendar. What they can’t do is operate safely or accurately in an enterprise setting.

Without governance and context, these DIY agents:

- Hallucinate, generating confident but incorrect outputs

- Leak sensitive data by asking the wrong questions or sharing more than they should

- Act on flawed logic because they lack shared context and a true understanding of your business

- Ignore policy and compliance because they’re not built to respect internal rules or regulations

In short: these agents are smart enough to be dangerous, and brittle enough to break trust.

TopQuadrant Is Your AI-Ready Data Foundation

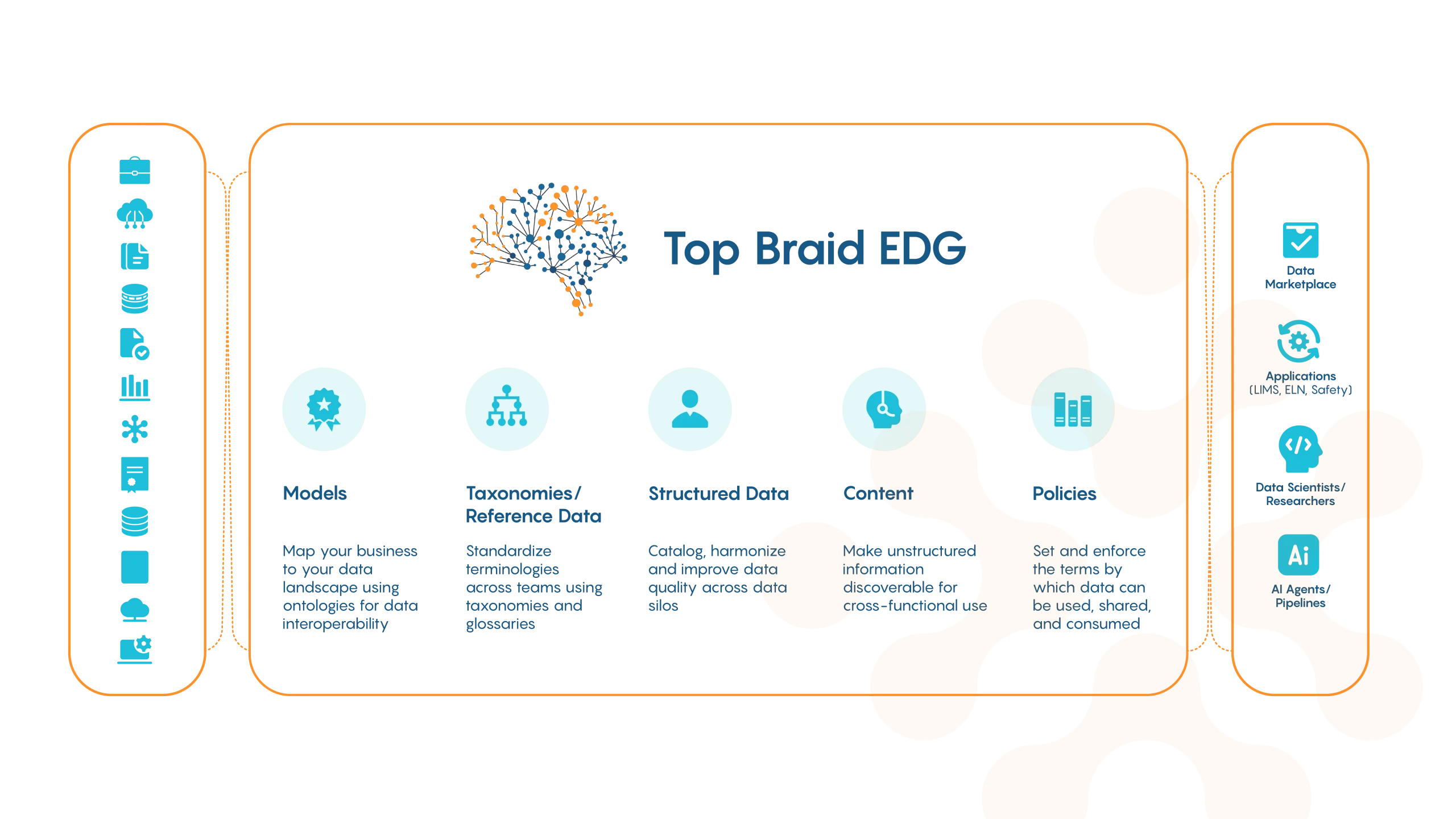

TopQuadrant’s EDG platform is where enterprises build the foundation for trustworthy, contextual enterprise AI. EDG delivers the core capabilities needed to operationalize safe, scalable, and intelligent agents:

- High-quality, AI-ready data as the starting point for intelligent automation

- AI governance and policy enforcement embedded in the data layer

- Semantic standards to reduce hallucinations and improve explainability

- Rich metadata integration to connect knowledge, content, and structured data

- Business-aligned models and vocabularies to ensure AI understands and acts with context

With TopQuadrant, you’re not just preparing your data—you’re enabling your entire organization to build, govern, and scale AI that works.

Why the Enterprise Is Different

Unlike consumers, enterprises can’t tolerate errors. When AI touches regulated data, recommends treatments, flags fraud, or generates compliance reports, the cost of being wrong is too high. You can’t afford a black box that can’t explain itself. You need AI that’s consistent, governed, and aligned with how your business thinks.

The Foundation Enterprise AI Needs

Enterprise-grade AI depends on more than a powerful model. It requires a foundation: a consistent layer of meaning that connects data, content, and context across the organization. The AI data platform that provides this foundation must unify:

- Enterprise models that define how the organization views the world

- Terminologies such as taxonomies and glossaries, plus master and reference data

- Unified metadata and unstructured content

- Contextual links between business knowledge and operational data

- Policy frameworks that ensure governance, compliance, and trust

Why Knowledge Graphs Make the Difference

The power behind this foundation lies in knowledge graphs. Unlike traditional data models, knowledge graphs organize information in a way that’s flexible, dynamic, and built for AI. They:

- Link data and meaning across silos

- Enable machines to reason and infer relationships

- Power explainability and traceability for every AI action

- Provide context that improves accuracy and reduces hallucination

- Scale to support both operational decisions and generative AI use cases

Knowledge graphs make your data smarter—and your AI safer and more powerful. They’re the backbone of any effective AI data platform.
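To make "link data across silos" and "infer relationships" concrete, here is a minimal sketch in Python. It is not how EDG or any RDF store is implemented; it only assumes that facts are stored as subject-predicate-object triples (the shape RDF uses) and applies a single rule borrowed from RDFS: if x has type C and C is a subclass of D, then x also has type D. Each inferred triple records the two triples it came from, which illustrates the traceability the list above describes. The `TinyGraph` class and all `ex:` identifiers are hypothetical.

```python
Triple = tuple[str, str, str]


class TinyGraph:
    """Toy triple store with one RDFS-style inference rule (not a production reasoner)."""

    def __init__(self) -> None:
        self.triples: set[Triple] = set()
        # Maps each inferred triple to the pair of triples that justified it.
        self.provenance: dict[Triple, tuple[Triple, Triple]] = {}

    def add(self, s: str, p: str, o: str) -> None:
        self.triples.add((s, p, o))

    def infer_types(self) -> None:
        """Apply (x rdf:type C) + (C rdfs:subClassOf D) => (x rdf:type D) until nothing new appears."""
        changed = True
        while changed:
            changed = False
            types = [t for t in self.triples if t[1] == "rdf:type"]
            subclass_links = [t for t in self.triples if t[1] == "rdfs:subClassOf"]
            for x, _, c in types:
                for sub, _, sup in subclass_links:
                    if c != sub:
                        continue
                    inferred = (x, "rdf:type", sup)
                    if inferred not in self.triples:
                        self.triples.add(inferred)
                        self.provenance[inferred] = ((x, "rdf:type", c), (sub, "rdfs:subClassOf", sup))
                        changed = True

    def explain(self, t: Triple) -> str:
        """Say whether a triple was asserted directly or inferred, and from what."""
        if t not in self.triples:
            return "unknown"
        if t not in self.provenance:
            return "asserted"
        premise_a, premise_b = self.provenance[t]
        return f"inferred from {premise_a} and {premise_b}"


g = TinyGraph()
g.add("ex:Acme", "rdf:type", "ex:CorporateCustomer")             # e.g. from a CRM silo
g.add("ex:CorporateCustomer", "rdfs:subClassOf", "ex:Customer")  # from the ontology
g.add("ex:Customer", "rdfs:subClassOf", "ex:Party")
g.infer_types()
print(g.explain(("ex:Acme", "rdf:type", "ex:Party")))
```

A real deployment would rely on an RDF triple store and a standard RDFS or OWL reasoner rather than a hand-written loop; the point here is only that an inferred fact can carry its own justification.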

Why Today’s Enterprises Need an AI Data Platform

Organizations today operate in a world of constant data growth, across many systems, teams, and geographies. Data is no longer neatly organized in one database or format. Instead, different business units may each maintain their own customer records, product catalogs, transaction logs, regulatory documents, research data, or content repositories. Each data source often has its own structure, metadata conventions, and business semantics. As enterprises scale, the complexity and fragmentation of data increase.

In that environment, building reliable AI solutions becomes a challenge. Without a unified data foundation, AI models tend to produce inconsistent outputs, work from incomplete context, or inherit poor data quality. Analytics initiatives stall because data engineers spend more time cleaning and reconciling data than deriving insights. Governance, compliance, and audit readiness become burdensome when data definitions, lineage, and policies are scattered across silos.

An AI data platform solves these problems by creating a semantic, governed layer that brings consistency, context, and control. It provides a shared foundation where metadata, business definitions, relationships, and lineage are captured. This enables enterprises to treat data not as isolated records but as interconnected assets. With such a foundation, teams can confidently build AI, analytics, reporting, and automation.

For heavily regulated industries such as financial services, life sciences, and healthcare, the value of an AI data platform is especially clear. When data has to support compliance, audits, traceability, privacy, and governance requirements, a governed and contextual data layer becomes not just beneficial but essential.

What an Effective AI Data Platform Looks Like Under the Hood

An AI data platform must do more than store data. At its core, it must provide structure, semantics, governance, and adaptability. The foundation rests on a metadata layer that captures not only technical details such as schema, field names, data types, and lineage, but also business context such as definitions, policies, classifications, and usage restrictions. This layer forms the connective tissue between raw data, business users, compliance teams, and AI consumers.
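As a rough illustration of one metadata record that carries both kinds of context, here is a Python sketch. The field names (`lineage`, `classification`, `usage_restrictions`), the classification labels, and the purpose strings are assumptions chosen for the example, not a TopQuadrant schema.

```python
from dataclasses import dataclass, field


@dataclass
class FieldMetadata:
    # --- technical metadata ---
    dataset: str
    name: str
    data_type: str
    lineage: list[str] = field(default_factory=list)  # upstream hops, source first
    # --- business metadata ---
    definition: str = ""
    classification: str = "internal"  # hypothetical labels: public / internal / confidential
    usage_restrictions: set[str] = field(default_factory=set)  # purposes that are NOT permitted

    def allowed_for(self, purpose: str) -> bool:
        """A purpose is allowed unless it is explicitly restricted for this field."""
        return purpose not in self.usage_restrictions


email = FieldMetadata(
    dataset="crm.customers",
    name="email",
    data_type="string",
    lineage=["web_signup_form", "crm.customers"],
    definition="Primary contact e-mail address supplied by the customer.",
    classification="confidential",
    usage_restrictions={"llm_training", "marketing_export"},
)

print(email.allowed_for("billing"))       # True
print(email.allowed_for("llm_training"))  # False
```

Keeping usage restrictions next to the technical description means any consumer, including an AI agent, can check them before touching the data.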

On top of this metadata foundation sits a semantic model – a knowledge graph – that defines entities, relationships, business vocabularies, and domain ontologies. Through this model, disparate datasets become part of one unified fabric. Relationships between records emerge, not because data was forced into a rigid schema, but because semantics define how entities relate. This semantic backbone makes data interoperable and machine-understandable.
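The idea that relationships come from semantics rather than a forced schema can be sketched in a few lines of Python. Here two hypothetical source systems keep their own column names; a per-source mapping to shared vocabulary terms (the `ex:` properties, also hypothetical) stands in for the semantic model, and records that share a tax identifier are treated as the same entity. The matching rule is deliberately naive.

```python
from collections.abc import Iterable, Iterator

# Two hypothetical source systems, each with its own column names.
crm_rows = [{"cust_id": "C-1", "tax_no": "DE123", "name": "Acme GmbH"}]
billing_rows = [{"account": "A-9", "vat_id": "DE123", "balance": "1200.50"}]

# Stand-in for the semantic model: map each source's columns to shared vocabulary terms.
MAPPINGS = {
    "crm": {"cust_id": "ex:crmId", "tax_no": "ex:taxId", "name": "ex:legalName"},
    "billing": {"account": "ex:billingAccount", "vat_id": "ex:taxId", "balance": "ex:openBalance"},
}
IDENTITY_PROPERTY = "ex:taxId"  # shared term whose value identifies the same real-world entity


def to_entities(source: str, rows: Iterable[dict[str, str]]) -> Iterator[dict[str, str]]:
    """Rename each row's columns to shared terms; the source schemas stay untouched."""
    mapping = MAPPINGS[source]
    for row in rows:
        yield {mapping[col]: val for col, val in row.items() if col in mapping}


def unify(*batches: Iterable[dict[str, str]]) -> dict[str, dict[str, str]]:
    """Merge entities that share the identity property (naive: later values overwrite)."""
    merged: dict[str, dict[str, str]] = {}
    for batch in batches:
        for entity in batch:
            merged.setdefault(entity[IDENTITY_PROPERTY], {}).update(entity)
    return merged


graph = unify(to_entities("crm", crm_rows), to_entities("billing", billing_rows))
print(graph["DE123"]["ex:legalName"], graph["DE123"]["ex:openBalance"])  # Acme GmbH 1200.50
```

Real entity resolution has to handle conflicting values, fuzzy matches, and identifiers that differ between systems; in this sketch a later source simply overwrites an earlier one for the same property.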

The platform must also embed governance capabilities: access controls, policy enforcement, audit trails, versioning, and data stewardship workflows. Together, these ensure that changes to metadata, ontologies, or data structures do not break downstream systems or AI models. In an ideal platform, governance is a core architectural component built into the data layer, not a separate module bolted on afterward.
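A minimal sketch of governance enforced at the point of data access, assuming a simple role-to-classification policy. The roles, classification labels, and the `read_asset` function are hypothetical; the point is that the policy check and the audit entry happen in the same call, so no read can bypass either.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical policy: roles allowed to read each classification level.
READ_POLICY: dict[str, set[str]] = {
    "public": {"analyst", "steward", "ai_agent"},
    "internal": {"analyst", "steward", "ai_agent"},
    "confidential": {"steward"},
}


@dataclass(frozen=True)
class AuditEntry:
    timestamp: str
    user: str
    role: str
    asset: str
    decision: str


audit_log: list[AuditEntry] = []


def read_asset(user: str, role: str, asset: str, classification: str, value: str) -> str:
    """Check the policy, record the attempt in the audit log, then return or refuse the data."""
    # Unknown classifications map to an empty role set, so they are denied by default.
    allowed = role in READ_POLICY.get(classification, set())
    audit_log.append(
        AuditEntry(
            timestamp=datetime.now(timezone.utc).isoformat(),
            user=user,
            role=role,
            asset=asset,
            decision="allow" if allowed else "deny",
        )
    )
    if not allowed:
        raise PermissionError(f"role {role!r} may not read {classification} asset {asset}")
    return value


print(read_asset("agent-7", "ai_agent", "crm.customers.segment", "internal", "SMB"))
try:
    read_asset("agent-7", "ai_agent", "crm.customers.email", "confidential", "jane@example.com")
except PermissionError as err:
    print("blocked:", err)
print([entry.decision for entry in audit_log])  # ['allow', 'deny']
```

Denied attempts are logged just like successful ones, which is what gives audit teams a complete usage history.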

Finally, scalability and flexibility are vital. Enterprises update or add systems, ingest new data sources, or evolve business logic over time. A robust AI data platform grows with the business. It supports evolving ontologies, new data domains, changing regulations, and increasing demands for data consumption from analytics, machine learning, or intelligent applications.

From Data to Insight: Typical AI Data Platform Workflows

First, organizations ingest data from multiple sources including structured databases, CSV files, content repositories, APIs, and external data feeds. Then the data is mapped into a metadata layer where technical and business metadata is captured – schema definitions, field names, business definitions, classifications, data owner information, and lineage.
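The ingest-and-catalog step might look something like this for a CSV source, using only Python's standard library. The glossary entry, owner name, and crude type inference are illustrative assumptions; a real platform would pull definitions from a governed glossary and record richer lineage.

```python
import csv
import io


def _parses(cast, value: str) -> bool:
    """True if cast(value) succeeds, e.g. int("42")."""
    try:
        cast(value)
    except ValueError:
        return False
    return True


def infer_type(samples: list[str]) -> str:
    """Crude type guess from sample values (illustrative only)."""
    if samples and all(_parses(int, v) for v in samples):
        return "integer"
    if samples and all(_parses(float, v) for v in samples):
        return "decimal"
    return "string"


def catalog_csv(source: str, text: str, glossary: dict[str, str], owner: str) -> list[dict]:
    """Capture technical metadata from a CSV and attach business metadata from a glossary."""
    reader = csv.DictReader(io.StringIO(text))
    rows = list(reader)
    entries = []
    for column in reader.fieldnames or []:
        samples = [row[column] for row in rows if row.get(column)]  # skip empty/missing cells
        entries.append({
            "source": source,
            "field": column,
            "data_type": infer_type(samples),
            "definition": glossary.get(column, "UNDEFINED: needs steward review"),
            "owner": owner,
            "lineage": [source],
        })
    return entries


SAMPLE_CSV = "order_id,amount,region\n1001,19.99,EMEA\n1002,5.00,APAC\n"
GLOSSARY = {"amount": "Order value in EUR, including VAT."}  # hypothetical glossary entry

for entry in catalog_csv("erp_orders.csv", SAMPLE_CSV, GLOSSARY, owner="sales-ops"):
    print(entry["field"], entry["data_type"], "|", entry["definition"])
```

Fields with no glossary entry are flagged for steward review rather than silently accepted, so gaps in business context stay visible.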

Next, the metadata is aligned with a semantic model defined by a knowledge graph ontology. Entities and relationships are created to reflect business domains, providing context and meaning to the raw data.
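Aligning a source row with an ontology can be sketched as turning it into triples. The mapping below, the `ex:` class and property names, and the `Class/key` identifier scheme are all hypothetical. Columns the mapping does not cover are reported instead of dropped, so modelers can decide where they belong.

```python
# Hypothetical mapping from an "erp_orders" source to ontology terms.
ORDER_MAPPING = {
    "class": "ex:Order",
    "key": "order_id",
    "literals": {"amount": "ex:orderAmount", "region": "ex:salesRegion"},
    "links": {"customer_id": ("ex:placedBy", "ex:Customer")},  # column -> (property, target class)
}


def row_to_triples(row: dict[str, str], mapping: dict) -> tuple[list[tuple[str, str, str]], list[str]]:
    """Turn one source row into (subject, predicate, object) triples plus a list of unmapped columns."""
    subject = f"{mapping['class']}/{row[mapping['key']]}"  # hypothetical identifier scheme: Class/key
    triples = [(subject, "rdf:type", mapping["class"])]
    unmapped = []
    for column, value in row.items():
        if column == mapping["key"] or not value:
            continue
        if column in mapping["literals"]:
            triples.append((subject, mapping["literals"][column], value))
        elif column in mapping["links"]:
            predicate, target_class = mapping["links"][column]
            # Links point at another entity's identifier rather than copying its data.
            triples.append((subject, predicate, f"{target_class}/{value}"))
        else:
            unmapped.append(column)
    return triples, unmapped


row = {"order_id": "1001", "amount": "19.99", "region": "EMEA", "customer_id": "C-1", "promo_code": "SPRING"}
triples, unmapped = row_to_triples(row, ORDER_MAPPING)
for t in triples:
    print(t)
print("needs modeling decision:", unmapped)  # needs modeling decision: ['promo_code']
```

The `ex:placedBy` link is what turns isolated order rows into part of a connected graph: any customer data mapped elsewhere under the same identifier scheme joins up automatically.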

Once data is semantically modeled, governance rules and policies are applied – access controls, stewardship roles, audit trails, compliance constraints, and versioning.

With governance and semantics in place, data becomes AI-ready. Analytics, reporting, machine learning, and intelligent agent applications can query the graph, explore relationships, and retrieve consistent, context-rich results. Over time, as new data sources are added or business logic evolves, the semantic model and metadata layer are updated. The knowledge graph adapts without disrupting downstream applications, preserving consistency and continuity.
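Querying the graph usually means matching patterns of triples, which is what a SPARQL basic graph pattern does. The toy matcher below evaluates a list of patterns by nested-loop join over an in-memory list; it has no indexes or optimizer and is meant only to show how a question such as "which customers placed orders in EMEA?" becomes a walk across relationships. The data, the `ex:` names, and the `query` function are hypothetical.

```python
from typing import Optional

Triple = tuple[str, str, str]
Binding = dict[str, str]


def match(pattern: Triple, triple: Triple, binding: Binding) -> Optional[Binding]:
    """Extend `binding` so `pattern` matches `triple`; terms starting with '?' are variables."""
    extended = dict(binding)
    for term, value in zip(pattern, triple):
        if term.startswith("?"):
            if extended.get(term, value) != value:
                return None  # variable already bound to something else
            extended[term] = value
        elif term != value:
            return None  # constant does not match
    return extended


def query(triples: list[Triple], patterns: list[Triple]) -> list[Binding]:
    """Evaluate a conjunction of triple patterns by nested-loop join (no indexes, no optimizer)."""
    bindings: list[Binding] = [{}]
    for pattern in patterns:
        next_bindings = []
        for binding in bindings:
            for triple in triples:
                extended = match(pattern, triple, binding)
                if extended is not None:
                    next_bindings.append(extended)
        bindings = next_bindings
    return bindings


TRIPLES = [
    ("ex:Order/1001", "ex:salesRegion", "EMEA"),
    ("ex:Order/1002", "ex:salesRegion", "APAC"),
    ("ex:Order/1001", "ex:placedBy", "ex:Customer/C-1"),
    ("ex:Order/1002", "ex:placedBy", "ex:Customer/C-2"),
    ("ex:Customer/C-1", "ex:legalName", "Acme GmbH"),
    ("ex:Customer/C-2", "ex:legalName", "Globex Ltd"),
]

# "Which customers placed orders in EMEA?"
results = query(TRIPLES, [
    ("?order", "ex:salesRegion", "EMEA"),
    ("?order", "ex:placedBy", "?customer"),
    ("?customer", "ex:legalName", "?name"),
])
print([r["?name"] for r in results])  # ['Acme GmbH']
```

Each result keeps every intermediate binding (`?order`, `?customer`), so a consumer can see which relationships produced an answer, not just the answer itself.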

This workflow ensures that data remains trustworthy, AI outputs are explainable, governance is embedded, and the platform scales as the enterprise grows.

Why TopQuadrant’s AI Data Platform Is Different

TopQuadrant’s AI data platform stands out because it combines semantic sophistication with enterprise-grade governance. Rather than treating data as isolated silos or forcing legacy systems into rigid schemas, TopQuadrant builds a semantic, graph-based layer that brings metadata, business definitions, relationships, context, and governance into one unified fabric.

Every data asset becomes part of a shared knowledge graph. Business users, data stewards, and governance teams can collaborate on definitions, policies, and metadata. AI and analytics teams can consume data that is consistent, context-aware, and auditable. Compliance and audit teams have visibility into data lineage, policy enforcement, and usage history.

Because the platform is built on open standards, enterprises avoid vendor lock-in and retain flexibility. As business needs shift, new data sources emerge, or regulatory requirements evolve, the platform adapts. The semantic model can be extended, metadata updated, and governance rules enforced – all without breaking downstream systems.

With this kind of foundation, enterprises are not merely preparing for AI – they are building a resilient, future-proof data architecture. What begins as a foundation for analytics or automation becomes the backbone for intelligent agents, generative AI, regulatory compliance, and data-driven transformation.

